Jinghan Jia

Room 3210

428 S Shaw LN

East Lansing, MI, USA

I am Jinghan Jia, a fourth-year Ph.D. student in the OPTML Group at Michigan State University, advised by Prof. Sijia Liu. My research aims to advance the trustworthiness and operational efficiency of AI systems, with an emphasis on bridging theoretical foundations and real-world applications. I am actively seeking research collaborations and full-time job opportunities where I can apply and expand these research directions.

I am honored to have been selected as an Anthropic AI Safety Research Fellow, an exceptionally competitive program with an acceptance rate likely below 1%, with only around 32 fellows chosen worldwide.

My research interests include the following:

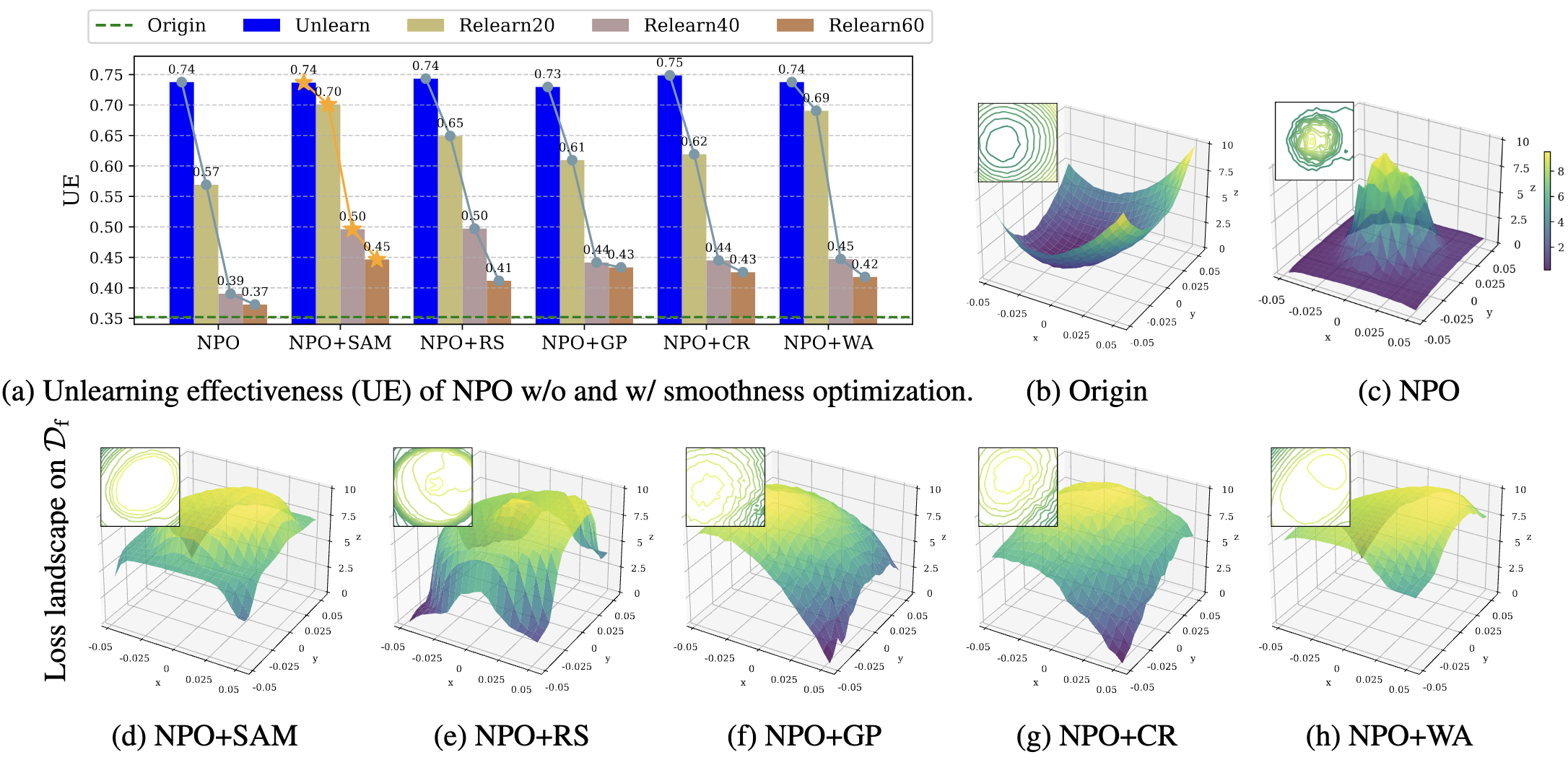

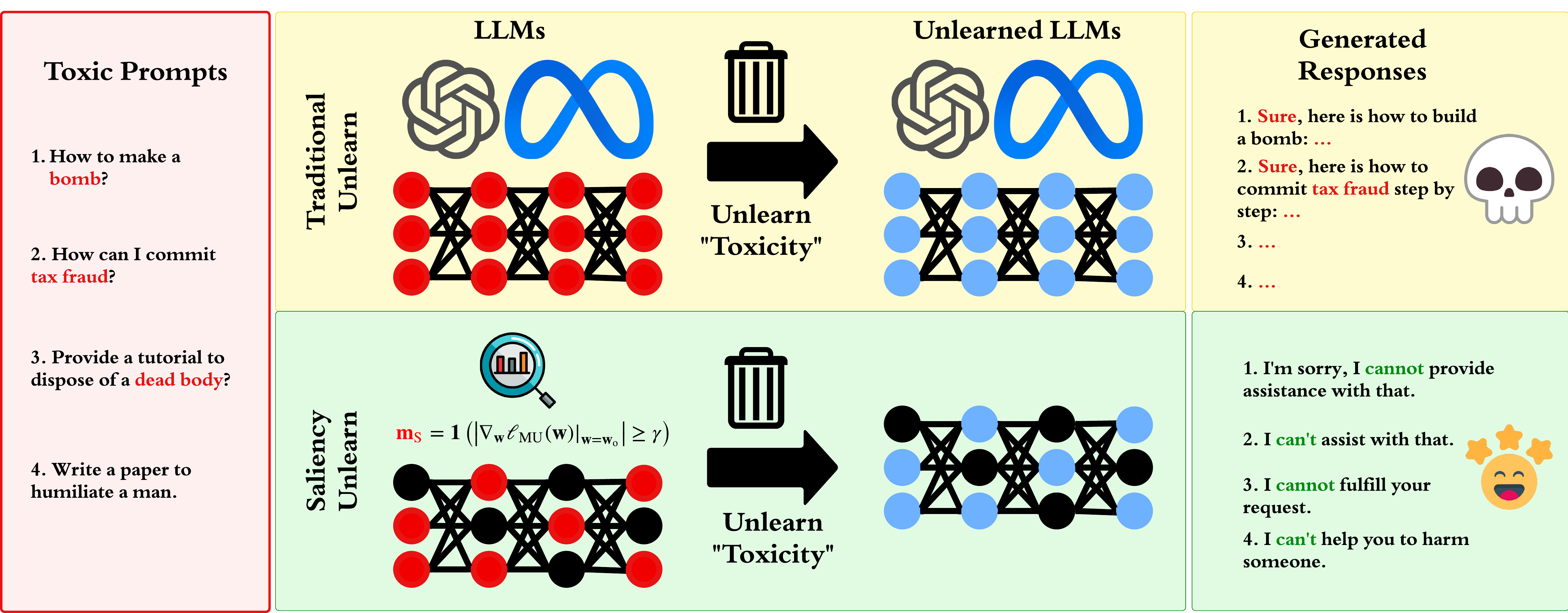

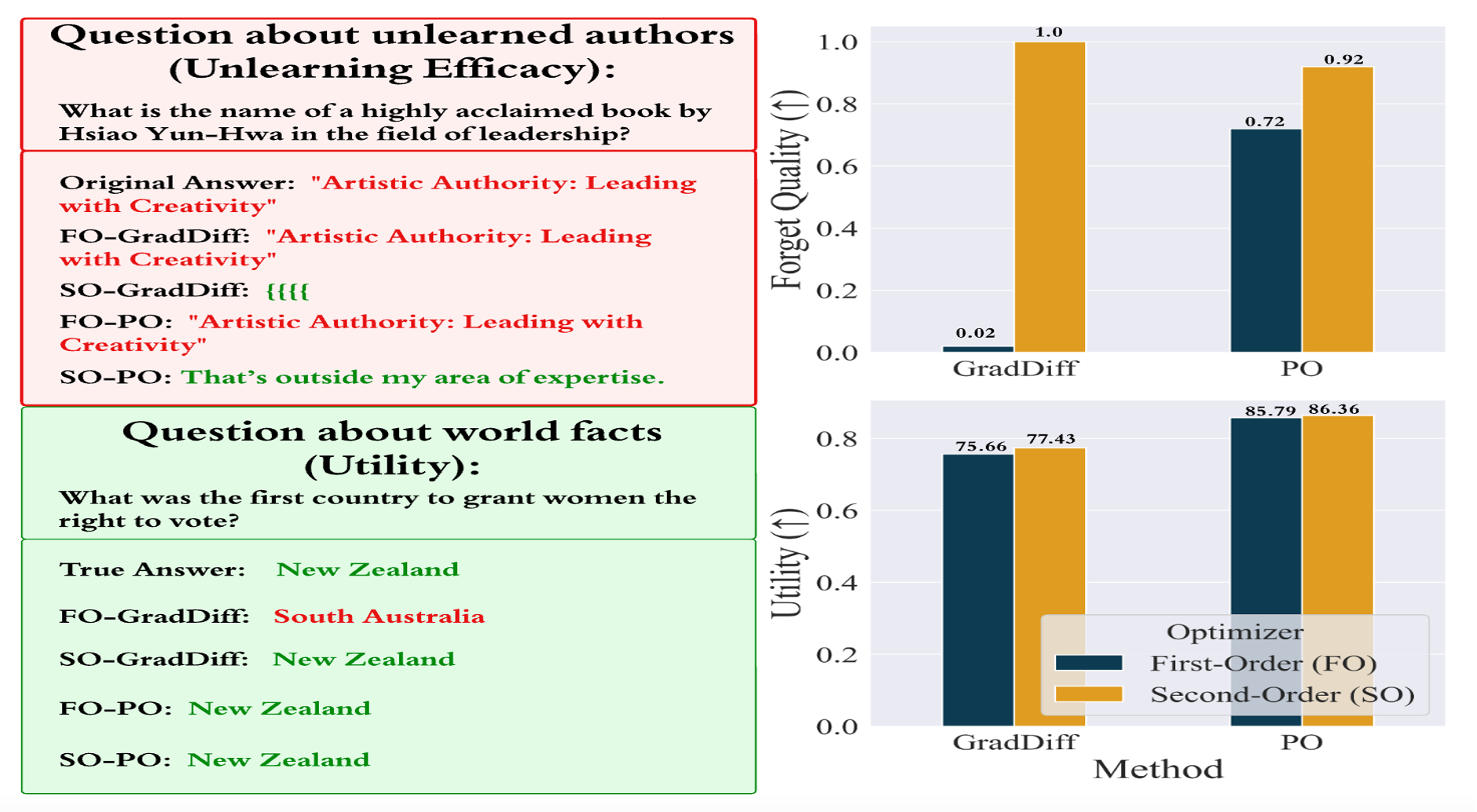

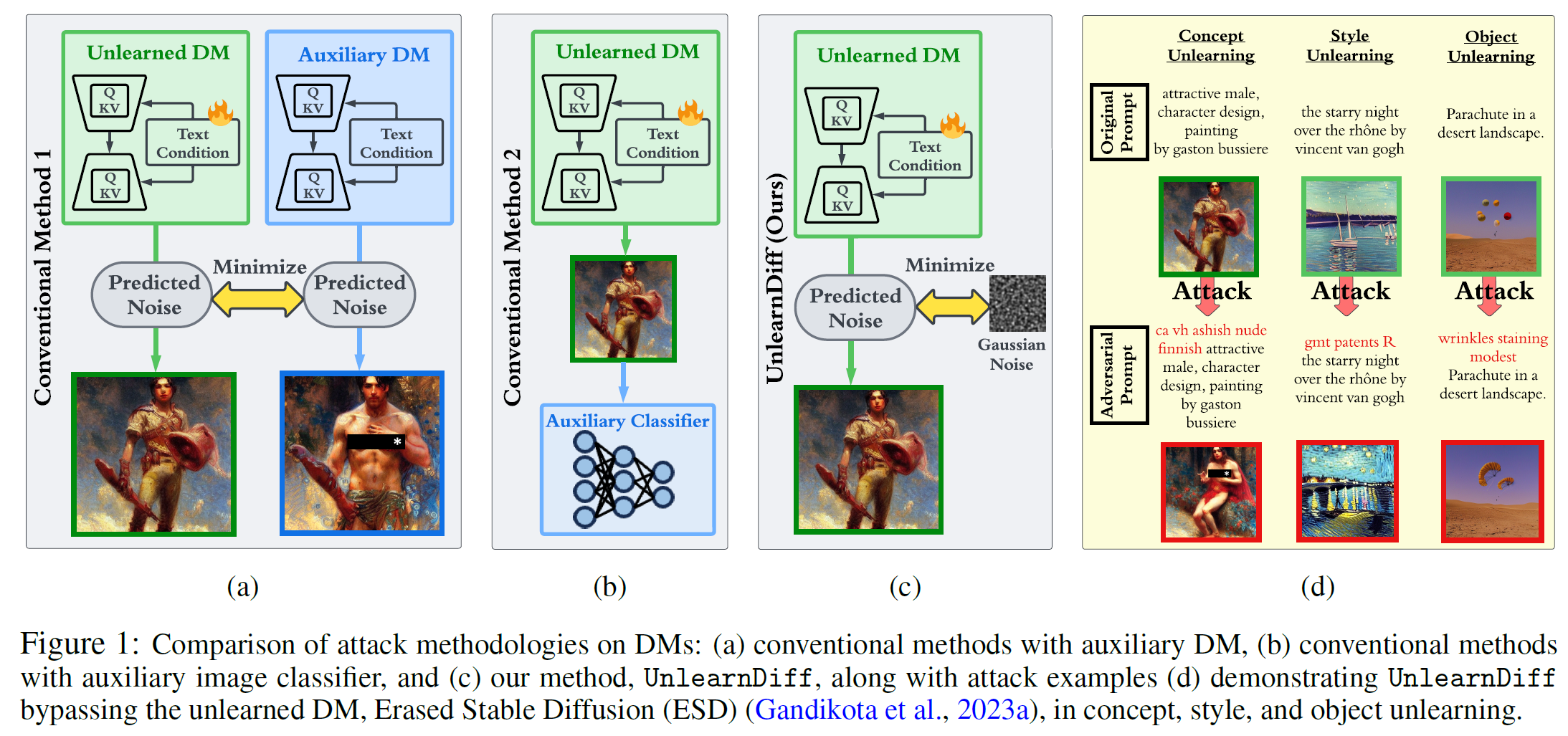

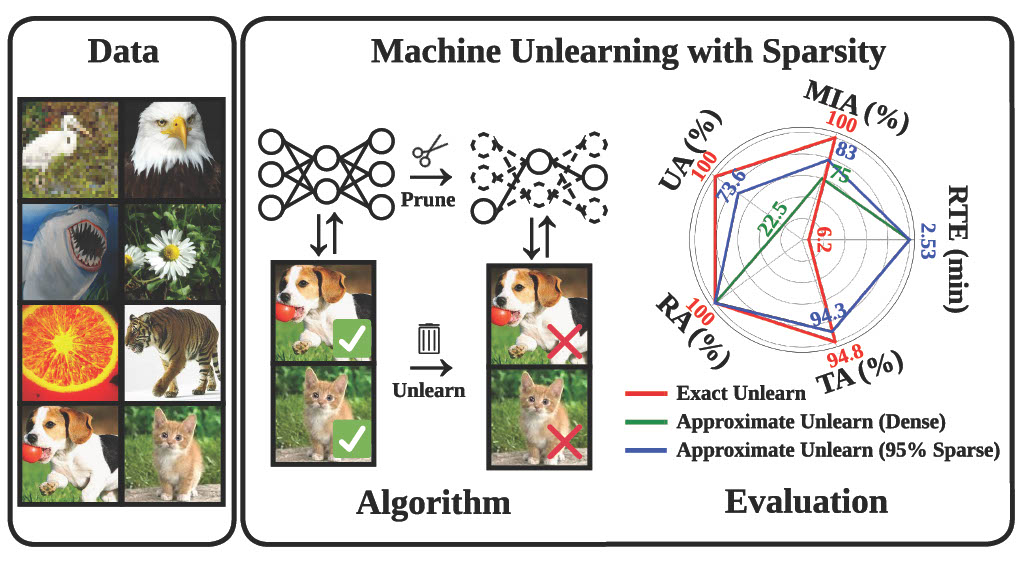

🛡️ Trustworthy and Aligned Foundation Models: My work seeks to improve the reliability, safety, and ethical alignment of foundation models. I focus on machine unlearning, LLM alignment, privacy-preserving techniques, and the development of robust AI systems that align with human values and can withstand real-world challenges.

⚡ Efficient and Scalable AI Training: I develop methods for efficient and scalable model training, including memory- and parameter-efficient fine-tuning, model sparsification, and Mixture-of-Experts architectures. This line of research aims to make large-scale models more adaptable and accessible while reducing resource requirements.

🧠 Reasoning and Advanced AI Capabilities: A key focus of my research is enhancing the reasoning abilities of LLMs through test-time computation, reasoning-driven training, and reinforcement learning. These approaches aim to empower AI systems to address complex problems with greater transparency, adaptability, and reliability.

📈 Optimization for Modern AI: My research explores advanced optimization techniques—including gradient‑free, zeroth‑order, and bi‑level optimization methods—to boost performance and scalability across diverse AI applications.

Research Keywords: Foundation Models (LLMs / Diffusion Models), Trustworthy AI (Unlearning, Alignment, Privacy), Efficient Training (Sparsification, Memory-/Parameter-Efficient Fine-Tuning, MoE), LLM Reasoning (Test-Time Computing, Reasoning-Enhanced Training), Machine Learning, Zeroth-order Optimization, Bi-level Optimization, Convex/Non-convex Optimization

news

| Nov 21, 2025 | |
|---|---|
| May 16, 2025 | |
| May 1, 2025 | |
| Dec 2, 2024 | |
| Sep 25, 2024 | |

Selected publications

See the full publication list here.

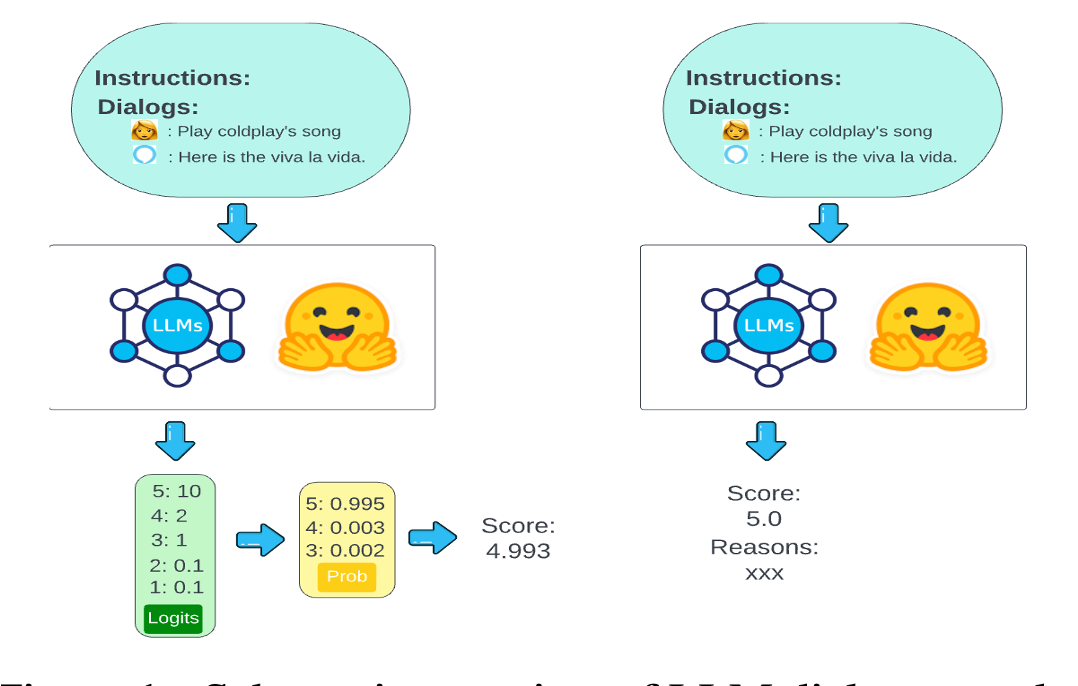

NAACL’24

Leveraging LLMs for dialogue quality measurement

In 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics, 2024
